Beyond the Agent Hype: The Real Progression of Enterprise AI

From Automations to Agentic Systems: Understanding the Spectrum

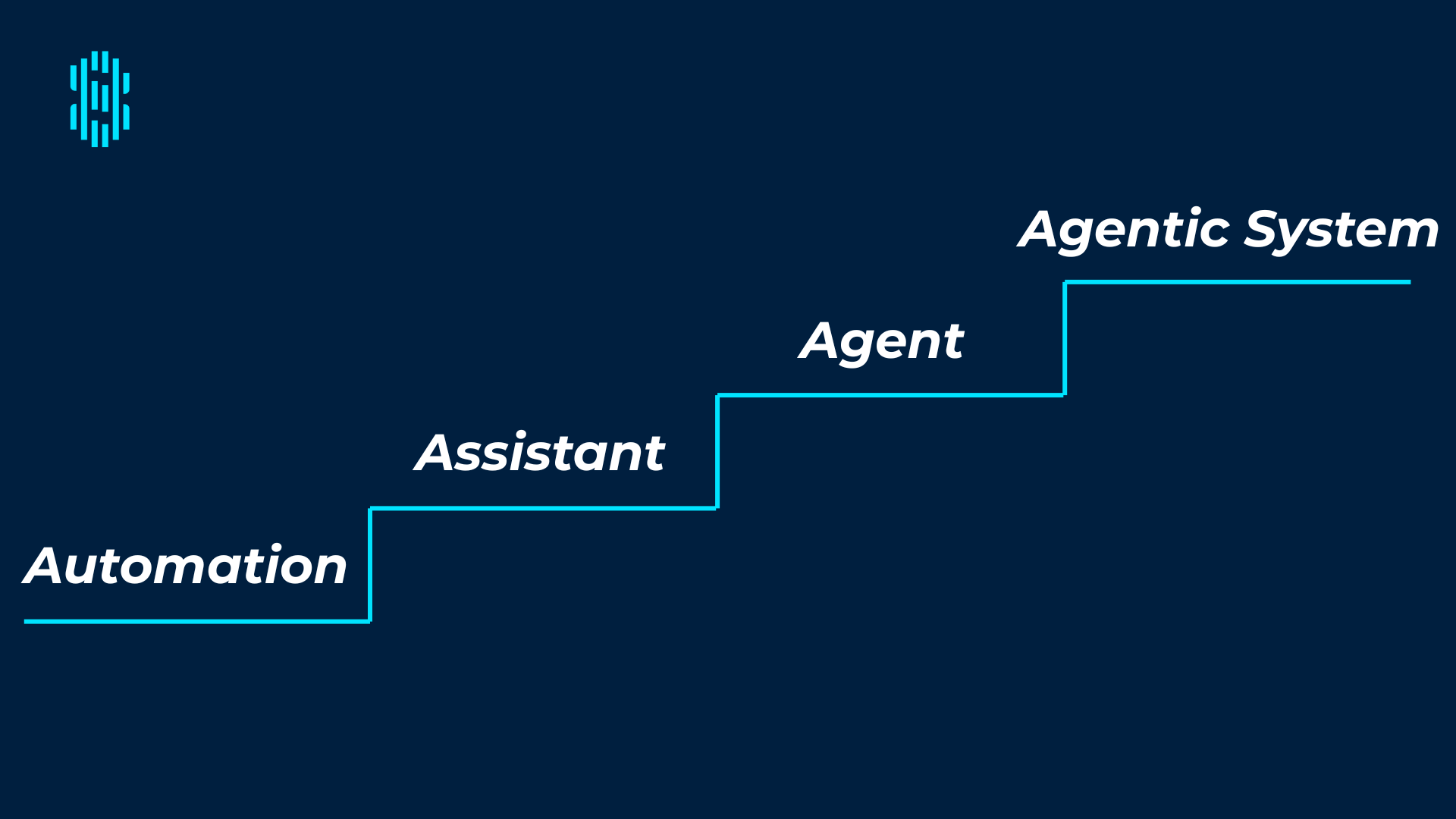

Recent enterprise discussions reveal something striking about how companies actually deploy AI at scale. It's not the single, all-powerful agent that vendors are selling. It's a careful progression through four distinct patterns, each building on the last.

The companies getting real value from AI aren't jumping straight to autonomous agents. They're moving methodically from simple automations to assistants to single agents to orchestrated agentic systems. Each step requires different infrastructure, governance and skills. Skip steps, and you create expensive failures. Follow the progression, and you build sustainable AI capability.

Here's what that progression actually looks like in practice.

The Three Core Patterns (Plus One)

Think of AI implementation not as one monolithic system, but as a spectrum of tools, each designed for specific types of work.

Automations handle your repetitive, predictable tasks. They're the workhorses that run the same steps reliably every time. A finance team sets up a workflow that generates daily sales reports at 9am sharp. No thinking required, just consistent execution. These systems are cheap, fast and predictable.

Assistants amplify human judgment and creativity. You stay in control while the AI accelerates your work. Picture drafting a critical client email: you provide the context and goal, the assistant drafts three versions in your voice, flags potentially risky phrasing and suggests subject lines. You review, adjust and send. The AI speeds you up without removing your judgment or accountability.

Agents operate autonomously across multiple systems. They plan, decide and act with minimal human input. At a recent AI Tinkerers event at the Baidu offices in Hong Kong, I showed an AI-native CRM that logs client calls, updates deal stages, writes weekly sales wraps and will soon handle prospecting emails independently. That's an agent orchestrating across multiple systems, not just generating text.

But here's what most discussions miss: at enterprise scale, a fourth pattern emerges.

Agentic systems orchestrate multiple specialized agents, each handling distinct subtasks. Think of the evolution like building a team. You start with one helpful intern (assistant), graduate to an autonomous worker (single agent), then scale to a small team with a supervisor (agentic system). Each level demands different capabilities, oversight and investment.

Notice how the value escalates, but so does the complexity. The more autonomous the system, the more critical it becomes to pick the right use case.

Why This Progression Matters Now

The hype around AI agents in 2025 is deafening. They're being pitched as the magic solution that can run whole parts of your business on autopilot. VCs are pouring billions into agent startups. Every tech conference features demos of autonomous systems handling complex workflows.

But this excitement is creating dangerous confusion. Many leaders treat assistants and agents as interchangeable. They're not. An assistant helps you work; an agent works for you. This confusion is causing companies to deploy complex agent systems for simple automation tasks, burning resources and creating unnecessary risk.

Others assume traditional automations are obsolete in the age of AI. Wrong again. For predictable, repetitive workflows, a simple automation remains the cheapest, fastest and most reliable option. You don't need a reasoning engine to move files from folder A to folder B.

There's also a growing myth that agents will replace everything. They won't. Not yet, and probably not for years. The companies succeeding with AI right now aren't betting everything on agents. They're matching the right pattern to the right problem.

Understanding the Enterprise Reality

The supervisor-to-subagents model behind agentic systems mirrors how real teams operate. You wouldn't ask one person to research market trends, write code, test systems, deploy to production and draft release notes without any oversight or specialization. The same logic applies to AI systems.

Enterprise architects are discovering that successful agentic systems follow predictable patterns. A supervisor agent breaks down user requests into subtasks. Specialist subagents handle focused jobs like data retrieval, analysis, drafting or approval. Each brings its own tools and returns results for validation before the workflow continues.
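To make the supervisor pattern concrete, here is a minimal sketch in Python. Everything in it is an illustrative assumption: the subagent functions, the fixed three-step plan and the trivial validation check are stand-ins, and a real system would put LLM calls and richer checks behind the same shape.

```python
from typing import Callable, Dict, List, Tuple

# Hypothetical specialist subagents. Each takes a payload and returns a result.
def retrieve(payload: str) -> str:
    return f"data for {payload}"

def analyze(payload: str) -> str:
    return f"analysis of {payload}"

def draft(payload: str) -> str:
    return f"draft based on {payload}"

SUBAGENTS: Dict[str, Callable[[str], str]] = {
    "retrieve": retrieve,
    "analyze": analyze,
    "draft": draft,
}

def plan(request: str) -> List[str]:
    """Break a request into ordered subtasks (a fixed plan in this sketch)."""
    return ["retrieve", "analyze", "draft"]

def validate(result: str) -> bool:
    """Check a subagent result before the workflow continues (assumed rule)."""
    return bool(result.strip())

def supervise(request: str) -> Tuple[str, List[str]]:
    """Run each subtask in order, passing validated output to the next one."""
    payload = request
    trace: List[str] = []
    for name in plan(request):
        result = SUBAGENTS[name](payload)
        if not validate(result):
            raise ValueError(f"subagent {name!r} returned an invalid result")
        trace.append(name)
        payload = result
    return payload, trace
```

Calling `supervise("Q3 churn")` walks the three specialists in order and returns the final draft along with a trace of which subagent ran at each step, which is the kind of record that makes these systems easier to debug.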

This isn't theoretical. Companies deploying these patterns report that the discipline of thinking through agent specialization forces better system design overall. It's easier to debug, monitor and improve a system where each component has a clear, bounded responsibility.

When to Use Each Pattern: A Decision Framework

The old way assumes agents are always the answer. The new way matches the tool to the task with surgical precision.

Choose automation when you have fixed, deterministic workflows where steps never change, the workflow needs strict low-latency performance with zero tolerance for errors, you must prove and explain every step for compliance or audit requirements, or the task is simple repetition without conditional logic.

Choose assistants when human judgment remains central to the work, you need to maintain accountability for decisions and outputs, the work involves creativity, nuance or stakeholder management, or speed matters more than full automation.

Choose single agents when you face a large problem space with lots of variation, the task requires multi-hop reasoning at a low marginal cost per iteration, the payoff from solving it well justifies the complexity, and your business already has APIs and tools that need orchestration.

Choose agentic systems when the job naturally decomposes into specialist subtasks, multiple systems need coordination across departments, the business impact justifies the additional oversight infrastructure, and you have the maturity to manage agent-to-agent handoffs.
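One way to read the framework above is as a rough triage order, sketched here in Python. The field names and the order of the checks are my own simplification for illustration, not a formal rule; a real assessment would weigh more criteria than four booleans.

```python
from dataclasses import dataclass

@dataclass
class Task:
    deterministic: bool                # steps never change
    human_judgment_central: bool       # a person must stay accountable
    decomposes_into_specialists: bool  # job splits into distinct subtasks
    has_oversight_maturity: bool       # monitoring and handoff management exist

def choose_pattern(task: Task) -> str:
    """Return the simplest pattern that fits, checking the cheapest first."""
    if task.deterministic:
        return "automation"
    if task.human_judgment_central:
        return "assistant"
    if task.decomposes_into_specialists and task.has_oversight_maturity:
        return "agentic system"
    return "single agent"
```

The design choice worth noticing is the ordering: the function reaches for the cheapest, most predictable pattern first and only falls through to an agentic system when both the task shape and the organization's maturity support it.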

The Hidden Economics of Agent Deployment

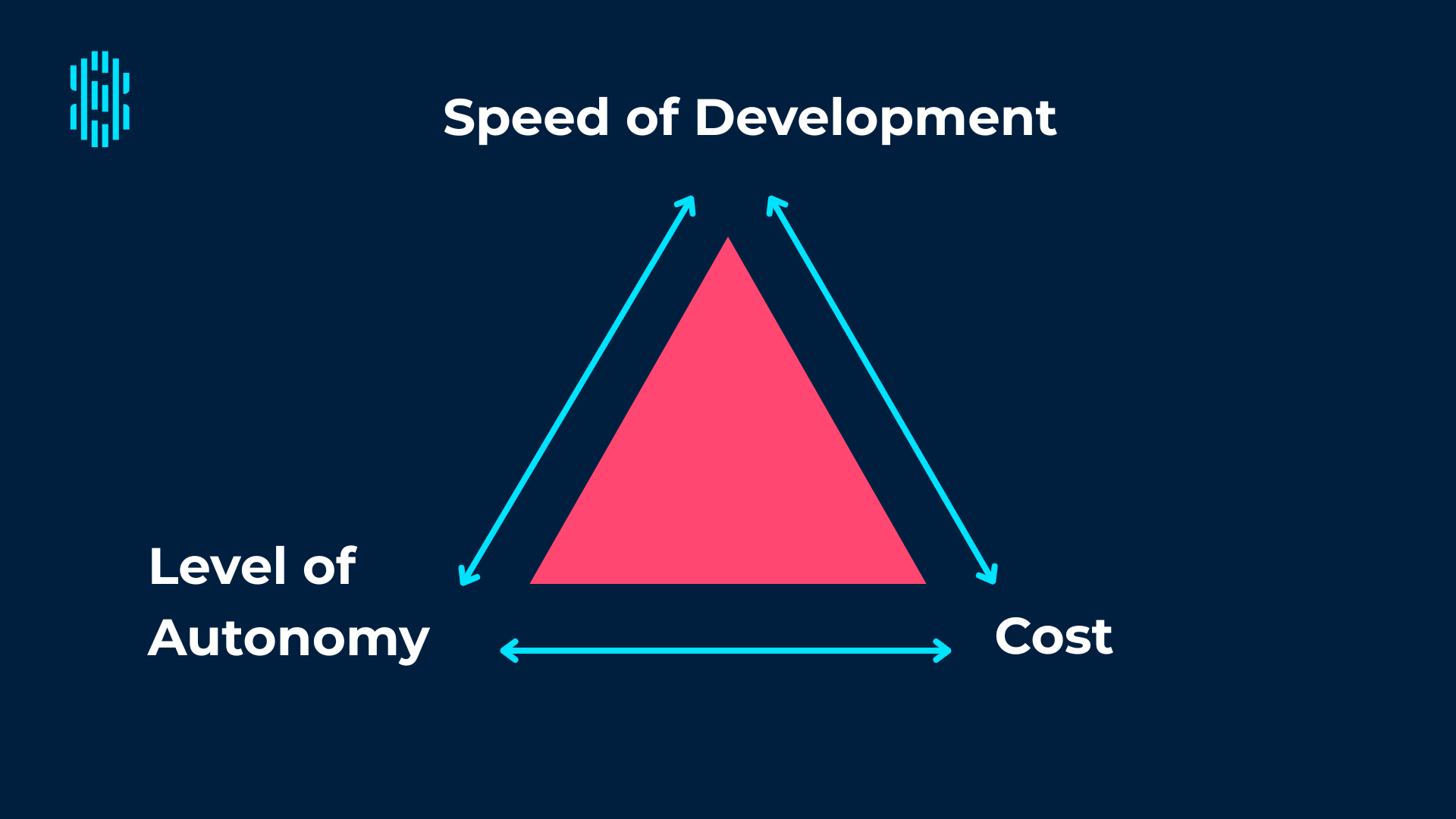

There's a triangle between speed of deployment, level of autonomy and cost. You rarely get all three.

If you need something tomorrow, start with an assistant or automation. These patterns have proven frameworks, established best practices and predictable costs. You can have a working system in days, not months.

If you need high autonomy, budget for significant infrastructure. Tool development, evaluation frameworks, guardrails and monitoring systems all require investment. Small inefficiencies compound quickly when agents make thousands of decisions daily.

Successful agent deployments follow a clear pattern: they start small with a single, well-bounded use case where success metrics are crystal clear. Time saved, accuracy improved, revenue generated. Concrete measures, not vague objectives.

Building Blocks of Successful Agents

When you do need an agent, understanding the architecture helps you spot potential failure points early.

Every useful agent shares four essential components. The LLM brain provides reasoning capability, but it's just one piece. Tools and connectors (increasingly standardized through protocols like MCP, or Model Context Protocol) enable agents to interact with your existing systems. Memory systems maintain both short-term context for current tasks and long-term learning from past interactions. Personas and guardrails define operating boundaries, from tone and style to hard limits on what actions the agent can take.

Missing any of these components creates a brittle system. Too many companies focus exclusively on the LLM while neglecting the connective tissue that makes agents actually useful in production.

The Skills Your Team Actually Needs

Agentic AI doesn't replace people. It multiplies your best people when you develop two distinct skill sets within your organization.

AI Users work in a one-prompt, one-response pattern. They own the task and maintain judgment over outputs. They need foundational skills: design thinking to frame problems correctly, prompt engineering to communicate effectively with AI, critical thinking to evaluate outputs and change management to adapt workflows.

AI Managers oversee multiple agents making independent decisions. They set goals, monitor performance and handle exceptions. This role requires advanced capabilities: directing AI toward business objectives, establishing quality control systems, monitoring performance metrics and ensuring compliance with safety requirements.

The companies succeeding with agents are the ones who've thoughtfully developed these operational capabilities across their teams.

Common Failure Modes and How to Avoid Them

Unbounded scope kills more agent projects than any technical limitation. Agents wander without clear boundaries. Successful teams define job stories with specific inputs, outputs and "done" criteria before writing a single line of code.

Tool spaghetti emerges when teams add connectors without architecture. Too many brittle integrations create cascading failures. The solution: centralize tools behind an orchestrator, version everything and log every call for debugging.
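As a sketch of what "centralize, version and log" can look like, here is a small Python registry. The class name, its methods and the in-memory log are hypothetical; in production the log would likely go to your observability stack rather than a list.

```python
from typing import Any, Callable, Dict, List, Tuple

class ToolRegistry:
    """Single entry point for tools: versioned registration, logged calls."""

    def __init__(self) -> None:
        self._tools: Dict[Tuple[str, str], Callable[..., Any]] = {}
        self.call_log: List[Dict[str, Any]] = []

    def register(self, name: str, version: str, fn: Callable[..., Any]) -> None:
        # Tools are keyed by (name, version) so old versions stay callable.
        self._tools[(name, version)] = fn

    def call(self, name: str, version: str, **kwargs: Any) -> Any:
        # Log the attempt first, so failed calls are recorded too.
        entry = {"tool": name, "version": version, "args": kwargs, "ok": False}
        self.call_log.append(entry)
        fn = self._tools[(name, version)]  # KeyError if never registered
        result = fn(**kwargs)
        entry["ok"] = True
        return result
```

Because agents only ever go through `call`, every integration failure shows up in one place, with the tool version that produced it.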

Quality drift appears weeks after a successful launch. Performance gradually decays as real-world usage diverges from test scenarios. Prevention requires evaluation datasets, regular spot-checks and automated rollback triggers.
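A rollback trigger does not need to be elaborate. The Python sketch below scores an agent against a fixed evaluation dataset and flags a rollback when accuracy falls too far below a baseline. The exact-match scoring and the 0.05 tolerance are assumptions chosen for illustration; most real evaluations need fuzzier scoring than string equality.

```python
from typing import Callable, List, Tuple

def accuracy(agent: Callable[[str], str], dataset: List[Tuple[str, str]]) -> float:
    """Share of evaluation prompts the agent answers exactly as expected."""
    if not dataset:
        raise ValueError("evaluation dataset is empty")
    correct = sum(1 for prompt, expected in dataset if agent(prompt) == expected)
    return correct / len(dataset)

def should_roll_back(current: float, baseline: float, tolerance: float = 0.05) -> bool:
    """Flag a rollback when accuracy drops more than `tolerance` below baseline."""
    return current < baseline - tolerance
```

Run on a schedule against the same dataset, a check like this turns "performance gradually decays" from something you notice in complaints into something you catch in a report.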

Hidden costs compound faster than expected. Each tool call, retrieval and retry adds marginal cost that becomes substantial at scale. Smart teams budget per task, cap retry attempts and aggressively cache results.
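Those three habits (budget per task, cap retries, cache results) fit in a few lines of Python. This is a sketch under stated assumptions: the `TaskBudget` class, the flat per-call `cost` and the in-memory cache are all hypothetical simplifications of what a real cost-control layer would track.

```python
from typing import Callable, Dict, Optional

class BudgetExceeded(Exception):
    """Raised when the next attempt would push a task over its budget."""

class TaskBudget:
    """Tracks spend for one task, caps retries and caches repeated calls."""

    def __init__(self, max_cost: float, max_retries: int) -> None:
        self.max_cost = max_cost
        self.max_retries = max_retries
        self.spent = 0.0
        self.cache: Dict[str, str] = {}

    def call(self, key: str, fn: Callable[[], str], cost: float) -> str:
        if key in self.cache:
            return self.cache[key]  # cached results cost nothing
        last_error: Optional[Exception] = None
        for _ in range(self.max_retries + 1):
            if self.spent + cost > self.max_cost:
                raise BudgetExceeded(f"budget of {self.max_cost} would be exceeded")
            self.spent += cost  # every attempt is charged, including failures
            try:
                result = fn()
            except Exception as exc:
                last_error = exc
                continue
            self.cache[key] = result
            return result
        raise RuntimeError(
            f"gave up after {self.max_retries + 1} attempts"
        ) from last_error
```

Note that failed attempts are charged too. That is deliberate: retries are exactly where costs hide, so the budget has to see them.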

Accountability blur creates organizational paralysis. When an agent makes a mistake, who takes responsibility? Every agentic workflow needs a named human owner who signs off on decisions and handles edge cases.

Your Next Steps: A Practical Path Forward

Look at your own workflows through this new lens. Where are the repetitive steps that could be automated today with simple, reliable tools? Where do you spend hours on drafts, research or analysis that an assistant could accelerate while you maintain control? Which complex, multi-system processes could genuinely benefit from an agentic approach, and do they justify the added complexity?

Add one more critical filter: what level of autonomy can you safely support with your current infrastructure? If you don't have monitoring, evaluation and audit capabilities in place, start smaller. Build confidence through controlled experiments. Scale with data, not hope.

The companies winning with AI aren't the ones deploying agents everywhere. They're the ones thoughtfully matching patterns to problems, building operational excellence and measuring real business impact at every step.

The Question That Changes Everything

The next time someone pitches you an AI solution, don't ask "How advanced is your agent?"

Ask instead: "Is this an automation, an assistant, an agent, or an agentic system problem?"

Get the answer wrong, and you'll waste months building the wrong solution. Get it right, and you'll be among the few actually delivering value while others chase complexity.

The real competitive advantage in 2025 isn't having the most sophisticated agents. It's knowing exactly when to use each pattern in the progression.